Releasing software with confidence without a testing team

It's far from ideal, but it can be okay...

About a year ago I started working at a local tech unicorn as a Product Manager. Their approach to delivering software was slightly different from standard Scrum, but they had been using it for around five years and it seemed to work. Its main benefit is that engineers can move between teams often, which seems to make them really happy without any visible sacrifices elsewhere.

But one thing I did notice was that we had over 1,000 employees and only around five testers. That didn’t seem great.

The approach seemed similar to what I had seen a few years earlier at Microsoft with the introduction of “combined engineering”, where they got rid of all the testing positions and expected engineers to test their own code, with more focus on unit tests and automated testing.

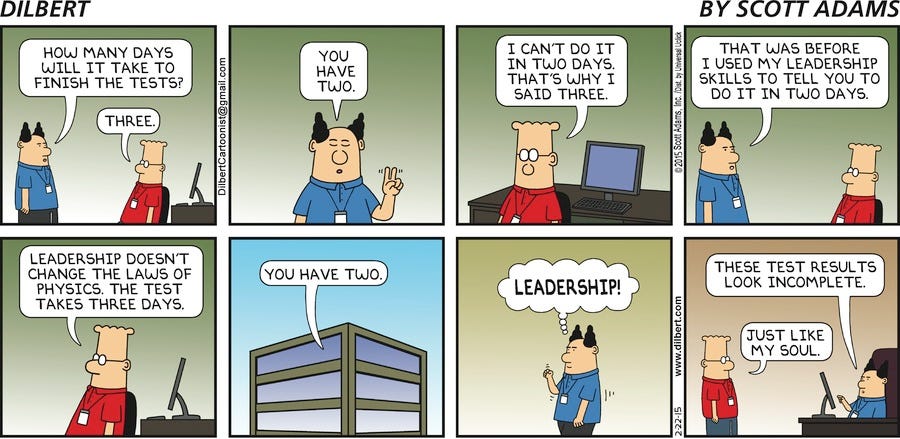

It didn’t work out, though. It quickly became clear that expecting developers to try to break their own code was divorced from reality, and in 80% of cases it didn’t work (in other words, 80% of engineers turned out not to be good at testing their own code).

In the end, testing was mostly outsourced to the India/Asia region with fairly poor results, but it was better than nothing.

If you don’t have a testing team but still want to release software with some certainty, here are a few ideas that, combined, have worked in my experience.

The team must know what having no testers means

The very first thing is to make it clear to the development team that there’s very little between their code and the end user in terms of verification and testing. It’s obvious to some of them, but not to others. This is essentially what Microsoft tried to do; on its own it isn’t a working solution, but it is the first step.

Team test sessions

For larger releases, we get the whole team together for a 2-3 hour test session to cover at least the main scenarios in as much depth as we can.

The trick is not to do it too often, so that people are actually excited about these sessions (they get to see the bigger things we’re building) and the sessions don’t become a chore of verifying bug fixes and minor changes.

The added benefit is that although developers are still the ones verifying the changes (and, as I said, most developers aren’t very good at this), in these sessions they aren’t trying to break their own code, and there are more of them doing it, so the chance of surfacing valid issues is significantly higher.

Rollout experiments & betas

Next, you must have a service that lets you roll out new features and releases in a controlled way. The bigger or riskier the change, the more careful you should be with the rollout. We have a few hundred thousand users, so I usually start with at most 1% of the user base and observe the feedback for 1-2 weeks before increasing the rollout to something like 10%, and from there I take bigger and bigger steps up to 100%.
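For readers wondering how such a service typically decides who is in a rollout, here is a minimal Python sketch of one common technique. It is a generic illustration, not any particular rollout service: it assumes string user IDs and a hypothetical feature name, hashes each user into one of 10,000 stable buckets, and includes a user when their bucket falls below the current percentage.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a (feature, user) pair to a stable bucket in [0, 10000)."""
    # Hashing the feature name together with the user ID gives each feature
    # its own independent split of the user base.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def is_in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    # 10,000 buckets give 0.01% granularity, so 1% means buckets 0..99.
    return rollout_bucket(user_id, feature) < round(percent * 100)


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(100_000)]
    for percent in (1, 10, 50, 100):
        # "new-checkout" is a hypothetical feature name used for illustration.
        exposed = sum(is_in_rollout(u, "new-checkout", percent) for u in users)
        print(f"{percent:>3}% -> {exposed} of {len(users)} users")
```

Because each user’s bucket never changes, everyone included at 1% is still included at 10% and beyond, so the early group keeps the feature while you widen the rollout.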

Usually, if you’ve reached 10% without seeing feedback about critical issues, that’s a good sign. The only caveat is that if your user base is still small, say 1,000 users, the 1% to 10% range (10 to 100 users) might not give you enough data points yet. In that case I would still start low, but increase the rollout in smaller, more gradual steps until you reach around 30%.
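To put the small-user-base caveat in numbers: 1% of 1,000 users is only 10 people, and even 10% is just 100. The sketch below picks a finer-grained list of steps for small user bases. The 10,000-user threshold, the exact step values and the 300,000-user example are illustrative assumptions, not fixed rules.

```python
def rollout_steps(user_count: int, small_base_threshold: int = 10_000) -> list[int]:
    """Return rollout percentages to step through (illustrative values only)."""
    if user_count < small_base_threshold:
        # Small user base: creep up slowly until ~30%, then take bigger steps.
        return [1, 3, 5, 10, 15, 20, 25, 30, 50, 75, 100]
    # Large user base: 1%, then 10%, then progressively bigger steps.
    return [1, 10, 25, 50, 100]


def exposed_users(user_count: int, percent: int) -> int:
    """Approximate number of users who see the feature at a given percentage."""
    return user_count * percent // 100


if __name__ == "__main__":
    # 300,000 is an assumed stand-in for "a few hundred thousand" users.
    for users in (1_000, 300_000):
        plan = ", ".join(
            f"{p}% ({exposed_users(users, p):,} users)" for p in rollout_steps(users)
        )
        print(f"{users:,} users: {plan}")
```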

In general, use your common sense and don’t rush it. Early in my career I had a great PM who, every time we felt time pressure, told us: “Don’t worry about the timeline, just remember, we don’t ship shit.” And you shouldn’t either.

Test environments & dogfooding

At Microsoft, dogfooding was huge. For those unfamiliar with the term, it simply means using alpha/beta releases in-house before releasing to the public (or even to beta users). This can be a good strategy for mid-sized to larger companies of roughly 50 people or more.

Dogfooding requires some form of test environment. In some cases it even makes sense to split this into a test environment, a pre-production environment and the live environment, but even a basic setup ensures you have a way to try things out without the developer who built them demoing them from their local environment. It’s an absolute must-have.

Again, dogfooding won’t work well in every product organization. For example, if you work on specialized medical software, it will be almost impossible to dogfood because your engineers and colleagues aren’t doctors. In most consumer software, however (which is what Microsoft is mostly known for), it can work wonders.

Building up the initial dogfooding setup and a feedback collection system obviously takes some time, but in the medium to long term it’s an investment well worth making. And, as in the previous section: don’t rush it. You need to give the “dogs” enough time to “digest” the new things you’re about to ship. If you leave only 3 days for dogfooding and then ship, you’re missing out on most of the potential benefits.
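One way to enforce “don’t rush it” is to make the minimum dogfooding period an explicit release check. The Python sketch below is only an illustration: the 14-day minimum is an assumed value (3 days is clearly too short, but no exact number is prescribed here), and the count of open critical issues reported by dogfooders is a hypothetical field standing in for whatever feedback system you use.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed minimum dogfooding period; tune this to your own product.
MIN_DOGFOOD_DAYS = 14


@dataclass
class DogfoodBuild:
    version: str
    dogfood_started: date
    open_critical_issues: int  # hypothetical: critical bugs reported in-house


def ready_to_ship(build: DogfoodBuild, today: date, min_days: int = MIN_DOGFOOD_DAYS) -> bool:
    """Ship only after a full dogfooding period with no open critical issues."""
    soaked_long_enough = today - build.dogfood_started >= timedelta(days=min_days)
    return soaked_long_enough and build.open_critical_issues == 0


if __name__ == "__main__":
    build = DogfoodBuild("2.4.0-beta", date(2024, 3, 1), open_critical_issues=0)
    print(ready_to_ship(build, date(2024, 3, 4)))   # False: only 3 days of dogfooding
    print(ready_to_ship(build, date(2024, 3, 15)))  # True: 14 days, nothing critical open
```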

Outsource testing, but for free

This might be the most controversial point, and one I haven’t heard much from other companies, but in our case it works quite well. We have partners who resell our software and get a cut of the revenue they bring us. They also often act as the first line of support and consultation for the clients they referred, helping with correct setup, usage best practices and troubleshooting.

These resellers know both our clients and the product itself extremely well. We have set up test accounts for most of them, which lets them try out new features before they hit the market, and they love that. It also gives us valuable feedback, both UI/UX insights and potential bugs. Our partners are incentivised to help us: if we do well, they do well.
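Dogfooding and partner test accounts fit naturally into the same release-ring idea: employees see a feature first, then partners’ test accounts, then the public. Here is a minimal Python sketch of that ordering. The ring names, account IDs and set-based lookups are hypothetical and stand in for whatever feature-flag system you actually use.

```python
from enum import IntEnum


class Ring(IntEnum):
    """Release rings, ordered from earliest to latest audience."""
    INTERNAL = 0  # employees dogfooding pre-release builds
    PARTNERS = 1  # resellers' test accounts
    PUBLIC = 2    # every customer


def account_ring(account_id: str, employees: set[str], partner_test_accounts: set[str]) -> Ring:
    """Work out which ring an account belongs to (hypothetical set-based lookup)."""
    if account_id in employees:
        return Ring.INTERNAL
    if account_id in partner_test_accounts:
        return Ring.PARTNERS
    return Ring.PUBLIC


def feature_visible(feature_ring: Ring, ring: Ring) -> bool:
    """A feature released to a ring is visible to that ring and every earlier one."""
    return ring <= feature_ring


if __name__ == "__main__":
    employees = {"emp-1"}
    partners = {"partner-test-7"}
    # A feature currently released up to the partner ring.
    for account in ("emp-1", "partner-test-7", "customer-42"):
        ring = account_ring(account, employees, partners)
        print(account, ring.name, feature_visible(Ring.PARTNERS, ring))
```

Using an ordered enum keeps promotion simple: moving a feature from `PARTNERS` to `PUBLIC` widens the audience without changing any per-account logic.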

These kinds of affiliate and partnership deals are becoming more and more common, especially for SaaS businesses, so I expect this approach to become increasingly relevant over the years.

Summary

Hopefully these ideas are helpful. Maybe you can’t use all of them, or you’ll have to adapt them slightly to your specific product, circumstances and team setup, but I believe they should be broadly applicable.

The trick is not to pick just one: they only work in combination. And they will never replace having dedicated (manual) testers on the team who know the product in depth.

It’s just a stopgap until you manage to convince upper management of the need for proper dedicated testers.