Testing with developers - The right and wrong way

I’ve talked in one of my previous posts about my roughly 5 years at Microsoft. During that time, Satya took the helm, and overall they seemed to be making all the right moves and going in the right direction.

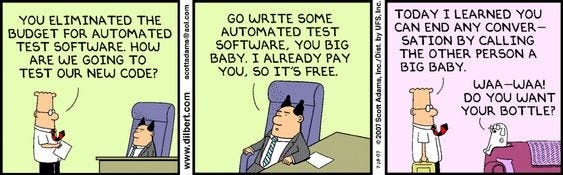

Just a reminder that previous direction looked something like this:

While things were generally going in the right direction, they made one major mistake during this time: getting rid of the tester role in the company.

This didn’t just damage the quality of Microsoft’s software; many others also took it as the new direction, one where developers simply test their own code.

The wrong way

It became obvious quite quickly that adding a new line to developers’ duties, “… also test your own code and features before shipping”, did not keep the quality bar as high as it had been historically.

My assumption is that the first reason for this has to do with incorrect incentives. Dedicated testers are paid to find defects in code. Developers are paid to deliver code that preferably has few or no defects. With this change, developers are effectively being told: here’s your baby, now go try to hurt it. In most cases this is going to be an uphill battle.

The other part, I believe, has to do with the fact that most engineers think they are already doing it: they consider edge cases, and they do test their code before merging it to master or pushing it live. Based on what I’ve seen after going from having testers to having none, developers adjust their workflow very little, and the amount of time spent on testing remains roughly where it was before.

A better way

Currently we’re also at a ratio of about 1 tester per 100 employees, so we have had to get creative and try out a few things. Some of these I mentioned in the previous article, so I won’t repeat them here. What has worked quite well for us is not dropping all the extra testing responsibilities on developers alone, but holding testing sessions with the whole cross-functional team, or the part of it that has been working on the new functionality under test. In our case this usually involves engineers + design + product manager.

Each of these roles brings a unique perspective that’s helpful for preparing the session (i.e. test cases/scenarios) as well as for doing the actual testing:

engineers - have the best insight into potential weak areas (where they know the business logic is complex and possibly prone to errors) and other potential edge cases (as they have a “white box” view of the code, it’s not something abstract to them)

designers - help review the use cases from a usability and UX perspective. What we often see is that the intended functionality is there and it works, but it has some rough edges that make it not so pleasant to use. This is especially important in heavily used areas of the application, where it can have the “pebble in the shoe” effect for many users

product managers - bring the end user perspective and an understanding of the “jobs to be done” aspect of the new functionality, and can help identify potential workflow issues that could be overlooked when focusing only on the binary question of “the feature works or it doesn’t”

In our implementation, one responsible person puts together the initial draft of the test plan (test scenarios). They then share it with the other participants, who suggest improvements, missing use cases, etc., and then we hold the actual test session (or sessions).

For the session itself, the most important point, which might not be obvious, is that all participants should spend time testing all the cases. The reason is the same as mentioned above: different roles bring different perspectives and can surface very different kinds of bugs to be fixed.
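If you want to keep track of the “everyone tests everything” rule during a session, a tiny script can do it. The sketch below is only an illustration, not a tool we actually use: the `TestSession` class, the participant names and the scenarios are all made up for the example. It records who has run which scenario and lists the participant/scenario pairs that are still missing.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class TestSession:
    """Hypothetical helper: tracks which participant has run which scenario."""
    scenarios: list[str]
    participants: dict[str, str]  # name -> role
    results: dict[tuple[str, str], str] = field(default_factory=dict)

    def record(self, participant: str, scenario: str, outcome: str) -> None:
        # Reject typos so the coverage report stays trustworthy.
        if participant not in self.participants:
            raise KeyError(f"unknown participant: {participant}")
        if scenario not in self.scenarios:
            raise KeyError(f"unknown scenario: {scenario}")
        self.results[(participant, scenario)] = outcome

    def missing(self) -> list[tuple[str, str]]:
        # Every participant should run every scenario, so anything
        # absent from the results is still to be done.
        return [
            (p, s)
            for p, s in product(self.participants, self.scenarios)
            if (p, s) not in self.results
        ]


# Made-up example data.
session = TestSession(
    scenarios=["create invoice", "edit invoice"],
    participants={"Ana": "engineer", "Ben": "designer", "Caro": "product manager"},
)
session.record("Ana", "create invoice", "pass")
session.record("Ana", "edit invoice", "bug: totals not refreshed")
session.record("Ben", "create invoice", "pass, but confusing button label")

for name, scenario in session.missing():
    print(f"{name} ({session.participants[name]}) still needs to test: {scenario}")
```

A shared spreadsheet with scenarios as rows and participants as columns does the same job; the point is simply to make gaps in coverage visible before the session ends.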

I don’t think this approach is ideal. Personally, I still haven’t seen anything that would successfully replace a dedicated in-house testing team. But in real-world scenarios where that often isn’t an option, it seems like a solution that could work for the majority of teams trying to keep the quality of their releases up.

Until next time!

-R